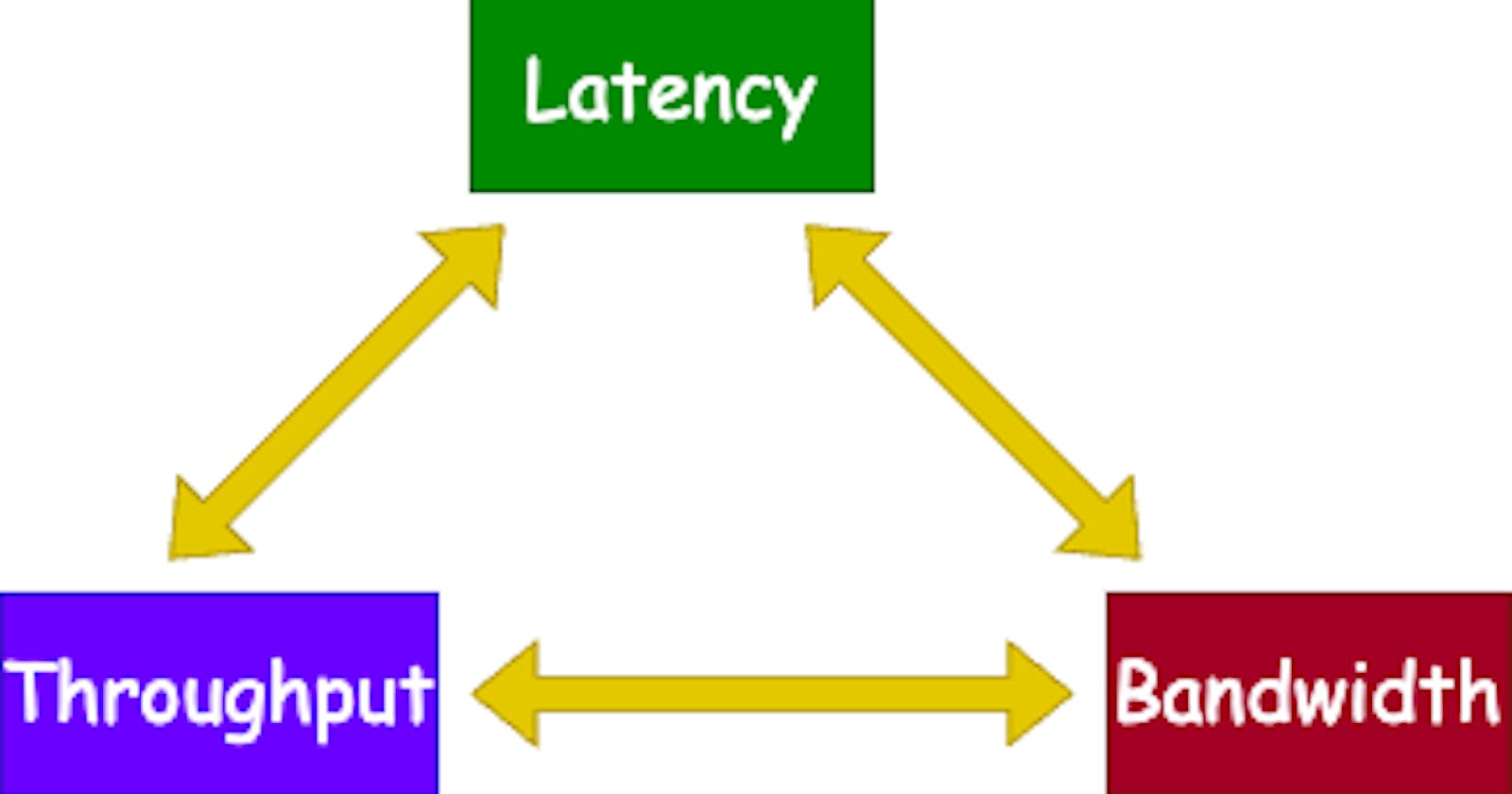

You may have heard the terms latency, throughput, and bandwidth. While these terms may seem interchangeable, they’re actually very different…

To understand these terms better, imagine pulling water from a well using a bucket tied to a rope.

Now you put the bucket into the well using the pulley and pull it back once it’s filled with water.

The time it takes for the bucket to reach the water’s surface, fill with water, and be pulled back to the top is called latency. In other words, latency is the time it takes for data to travel from one point to another.

On the other hand, the amount of water you can pull up in a given time is called throughput. This is similar to the amount of data that can be transmitted through a network in a given amount of time.

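Lastly, the size of the bucket is called bandwidth: the maximum amount of water that can be pulled up at one time. In a network, this is the capacity of the link, the maximum amount of data it can carry in a given amount of time. Throughput can never exceed bandwidth, and in practice it is usually lower.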
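To see how the three terms fit together, here is a minimal sketch in Python. It assumes one bucket making back-to-back round trips and coming up full every time. The function name and the 40-second and 20-second trip times are made up for illustration.

```python
def throughput_liters_per_minute(bucket_liters: float, round_trip_seconds: float) -> float:
    """Water delivered per minute when one bucket makes back-to-back round trips."""
    if round_trip_seconds <= 0:
        raise ValueError("round_trip_seconds must be positive")
    trips_per_minute = 60 / round_trip_seconds  # latency decides how many trips fit
    return bucket_liters * trips_per_minute     # bucket size decides how much each trip carries


# Hypothetical numbers: a 5-liter bucket and a 40-second round trip.
print(throughput_liters_per_minute(5, 40))  # 7.5

# Halving the latency doubles the throughput without changing the bucket.
print(throughput_liters_per_minute(5, 20))  # 15.0
```

In this simplified model, the same bucket (bandwidth) delivers more water per minute (throughput) simply because each trip takes less time (latency).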

Let’s imagine we are running a tournament to see who can fill the water container the fastest. We have three participants: Alice, Bob, and John. Each of them has a 5-liter bucket and a 50-liter container to fill. The tournament runs for 5 minutes.

Alice, Bob, and John pulling water from the well, respectively

After the contest, Alice, Bob, and John had filled their containers with 30, 25, and 40 liters of water, respectively. Even though they all had the same bandwidth (a 5-liter bucket), John had the highest throughput (40 liters in the container) in the given time (5 minutes).
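We can work out the numbers behind the tournament with a short Python sketch. The liters, bucket size, and duration come from the contest. The per-trip time is an estimate that assumes every bucket came up full and nobody paused between trips. The names `summarize` and `liters_filled` are just for this example.

```python
BUCKET_LITERS = 5      # bandwidth: the same for every participant
CONTEST_MINUTES = 5    # duration of the tournament

liters_filled = {"Alice": 30, "Bob": 25, "John": 40}


def summarize(liters):
    """Return (throughput in L/min, average seconds per round trip)."""
    throughput = liters / CONTEST_MINUTES
    trips = liters / BUCKET_LITERS                   # assumes full buckets on every trip
    seconds_per_trip = CONTEST_MINUTES * 60 / trips  # average latency of one trip
    return throughput, seconds_per_trip


for name, liters in liters_filled.items():
    throughput, seconds_per_trip = summarize(liters)
    print(f"{name}: {throughput:.1f} L/min, {seconds_per_trip:.1f} s per trip")

# Alice: 6.0 L/min, 50.0 s per trip
# Bob: 5.0 L/min, 60.0 s per trip
# John: 8.0 L/min, 37.5 s per trip
```

Under these assumptions, John wins because his trips are the shortest: lower latency per trip turns into higher throughput, even with the same bucket.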

Conclusion: We aim for higher throughput and lower latency when designing systems.